Jupyter notebooks edited in JupyterLab are my tool of choice when working with and exploring data in Python.

I frequently mature notebook code into importable `.py` files and further into stand-alone Python packages.

I recently read the “Everything Gets a Package” post, where Ethan Rosenthal describes his data science project setup.

This led me to rethink how I manage virtual environments, dependencies and JupyterLab installations across projects.

Here, I will share my current process for setting up development environments on my local machine or a remote server I work on. This approach solves three main problems for me:

- Use and manage one JupyterLab installation, with useful JupyterLab extensions installed, across projects. Before adopting this process, each project I worked on came with its own JupyterLab installation, which kept me from customizing and tweaking JupyterLab to maximize efficiency in every single project.

- Graduating code from a notebook to a `.py` file to a package should be easy (this is the central point in Ethan’s post, which is essentially achieved using `poetry` from the start).

- Of course, projects and tools used in my workflow should be separated into virtual environments.

If this sounds interesting, read on. But please also note that my approach will certainly change as months and years go by. I will share updates on this website.

Tools

Here is a short introduction to the tools used in my process. A detailed description is out of scope here; just follow the links to learn more:

- `pyenv` (link) - simple and transparent management and installation of different Python versions.

- `pyenv virtualenv` (link) - management of virtual environments as a plugin for `pyenv`.

- `pipx` (link) - system-wide installation of Python applications in their own environments.

- `poetry` (link) - dependency management for Python projects and applications; `poetry` is installed using `pipx`.

- JupyterLab (link) - browser-based user interface to write Python code, interact with datasets, visualize them and much more.

- JupyterLab extensions - I do not want to point out specific extensions here (that could be a later post), but there are many; see, e.g., list 1 and list 2.

- `ipykernel` (link) - IPython as a kernel for Jupyter; I am using it to register project-level virtual environments as kernels in Jupyter.

Approach

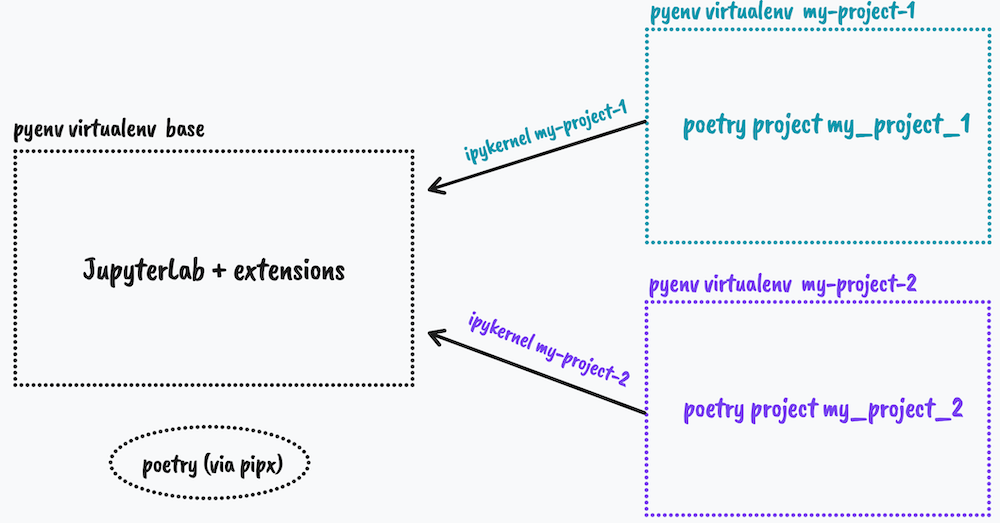

My setup currently looks like this:

The most important ideas are:

- I have a virtual environment called ‘base’ running JupyterLab and all extensions. The ‘base’ environment is created once using `pyenv virtualenv` and activated whenever I open a terminal (by editing my `.zshrc`).

- Each project lives in its own virtual environment managed by `pyenv virtualenv`.

- Each project is a `poetry` project, and `poetry` is used to install dependencies.

- `ipykernel` is used to register each project’s virtual environment as a kernel in the Jupyter instance living in the ‘base’ environment.
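The exact `.zshrc` edit that activates ‘base’ is not shown here. A minimal sketch of the lines involved might look like the following, assuming `pyenv` lives in `~/.pyenv`, the pyenv-virtualenv plugin is installed, and a virtual environment named `base` already exists. The `command -v` guard is an assumption as well, added so the file still loads on a machine without `pyenv`.

```shell
# Sketch of the relevant ~/.zshrc lines (assumptions: pyenv in ~/.pyenv,
# pyenv-virtualenv plugin installed, a virtualenv called 'base' exists).
export PYENV_ROOT="$HOME/.pyenv"
[ -d "$PYENV_ROOT/bin" ] && export PATH="$PYENV_ROOT/bin:$PATH"

# Guard (assumed, not from the post): only initialise pyenv if it is installed.
if command -v pyenv >/dev/null 2>&1; then
  eval "$(pyenv init -)"             # put pyenv shims on PATH, define the pyenv shell function
  eval "$(pyenv virtualenv-init -)"  # enable `pyenv activate` from the plugin
  pyenv activate base                # start every new terminal in the 'base' environment
fi
```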

Code

When starting a new project, I go through the following steps.

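Before getting started, run the following once to switch off `poetry`’s built-in virtual environment management. With this setting, `poetry` installs dependencies into whichever virtual environment is currently active instead of creating its own:

```shell
poetry config virtualenvs.create false
```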

mkdir my-project # create a project directory

cd my-project

pyenv virtualenv my-project # create the project virtual env

pyenv activate my-project # activate the virtual env

poetry init # initialize the poetry project

poetry add spacy # install dependencies

poetry add --dev black ipykernel # install dev dependencies

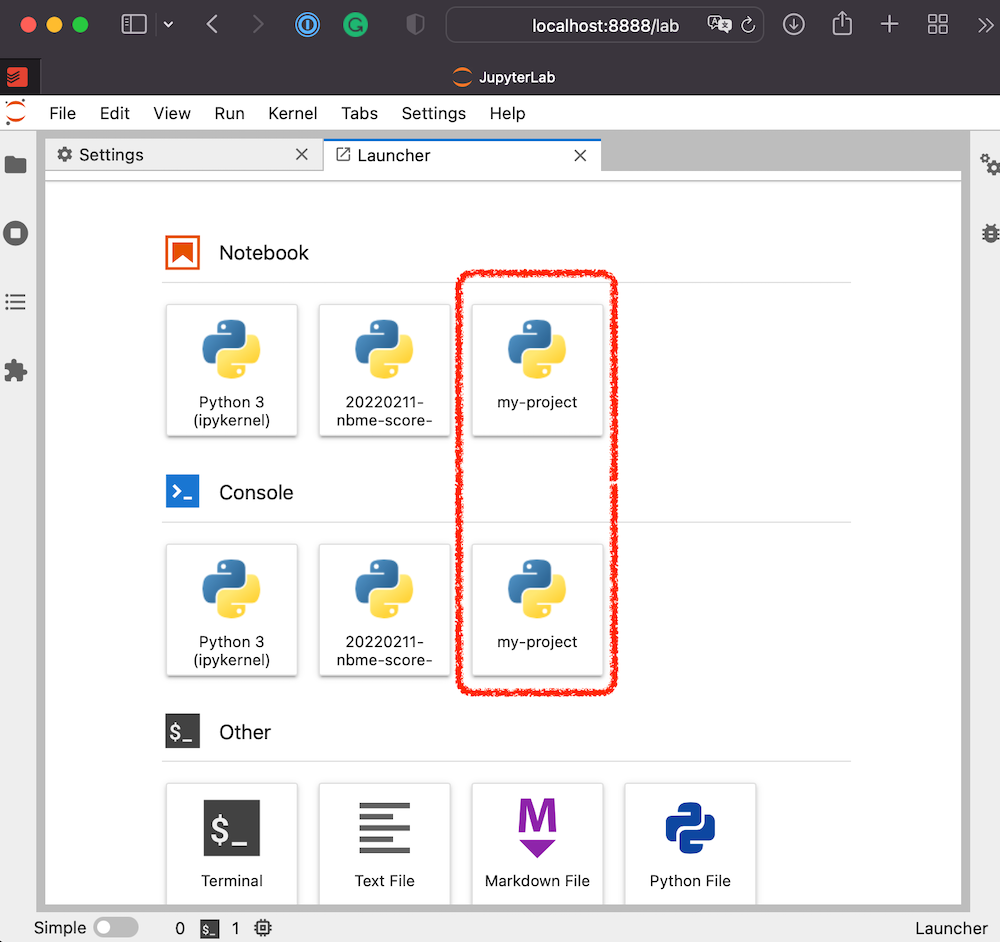

python -m ipykernel install --user --name my-project # register project kernel

git init # initialize version control

git add pyproject.toml poetry.lock # add files produced by poetry

# commit, track more files, push to a remote repo

Besides setting up the project directory and virtual environment, this registers the my-project kernel in the JupyterLab installation living in the ‘base’ environment:

Note that the commands above do not set up the ‘base’ environment - let me know if that would be of interest. For installing the tools used, refer to the links listed above.
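The per-project steps lend themselves to a small shell function. The sketch below is hypothetical: the name `new_project` and the `--display-name` label are illustrative choices, not part of the workflow above. It assumes `pyenv`, the pyenv-virtualenv plugin (initialised in the shell) and `poetry` 1.2 or newer (for `--group dev`) are installed, and that `poetry config virtualenvs.create false` has already been run. `poetry init --no-interaction` skips the interactive prompts, so project dependencies such as `spacy` are added afterwards.

```shell
# Hypothetical helper wrapping the per-project steps (not from the original post).
# Assumes pyenv + pyenv-virtualenv are initialised in the shell, poetry >= 1.2 is
# installed, and `poetry config virtualenvs.create false` was run once.
new_project() {
  if [ "$#" -ne 1 ]; then
    echo "usage: new_project <name>" >&2
    return 1
  fi
  local name="$1"

  mkdir "$name" && cd "$name" || return 1                   # create and enter the project directory
  pyenv virtualenv "$name" || return 1                      # create the project virtual env
  pyenv activate "$name" || return 1                        # activate it in the current shell
  poetry init --no-interaction --name "$name" || return 1   # write pyproject.toml without prompts
  poetry add --group dev ipykernel || return 1              # dev dependency (older Poetry: --dev)
  python -m ipykernel install --user --name "$name" \
    --display-name "Python ($name)" || return 1             # register the project kernel
  git init                                                  # initialize version control
}
```

Run it as `new_project my-project` from the directory that should contain the project, then `poetry add` the project’s own dependencies. Running `jupyter kernelspec list` in the ‘base’ environment should then show the new kernel.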

Caution

My approach has a few caveats:

- Upgrading Python or JupyterLab in the ‘base’ environment could become messy when breaking changes occur. Only time will tell how big of a problem this really is in practice. However, all project code and dependencies are and will remain self-contained and reproducible, and that’s what really matters.

- There are quite a few tools at play here, and all of them will eventually be updated or replaced by newer ones. There is no way around embracing change here.

- Although running Python under Windows has become much easier recently, I would be surprised if all this worked out of the box outside Linux or macOS (let me know if it does!): `pyenv`, for instance, does not officially support Windows outside the Windows Subsystem for Linux. So be careful and patient when trying to adapt this process on a Windows box!

End

Thanks for reading and do let me know if you have any feedback or suggestions for further improvements.