Short: RAFT

RAFT: Adapting Language Model to Domain Specific RAG

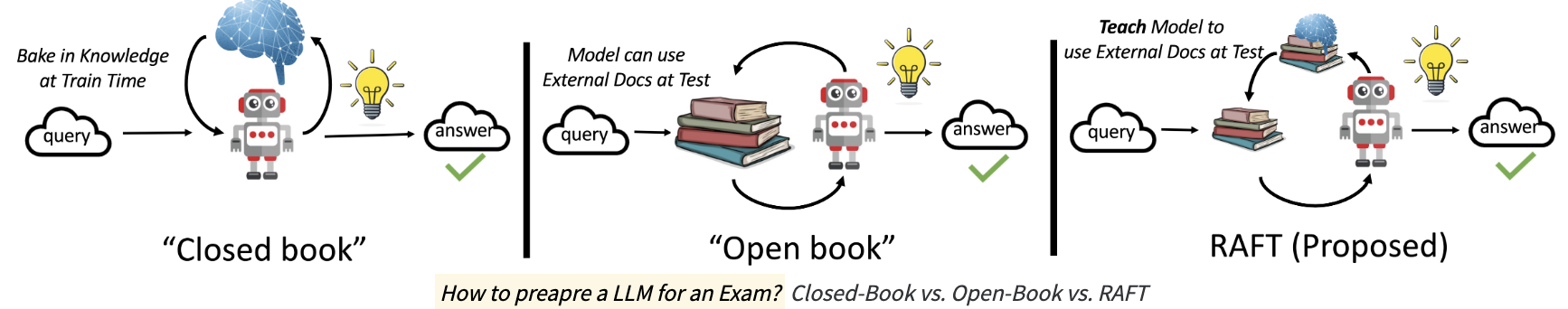

From the Gorilla LLM project, Retrieval Aware Fine-Tuning (RAFT) combines retrieval-augmented generation and fine-tuning to adapt language models to domain-specific knowledge.

Blog post: here

Paper: here

Why

Retrieval-augmented generation (RAG) and fine-tuning are the two main ways to expose large language models to recent, domain-specific information.

Retrieval Aware Fine-Tuning (RAFT) is a training recipe, not a model: it combines both approaches and generalizes Retriever Aware Training (RAT).

What

Image from the blog post here:

The core ideas are:

- supervised fine-tuning (SFT) on samples whose context contains both positive (relevant) and negative (irrelevant) documents

- chain-of-thought fine-tuning, with answers that quote segments from the context

- force the model to memorize domain knowledge and disregard irrelevant documents by sometimes removing the positive documents from SFT samples
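
The three ideas above can be sketched as a small data-construction step. This is a minimal illustration, not the authors' implementation: the names `RaftSample`, `build_raft_sample`, `p_positive`, the `[Document i]` prompt layout, and the default values are all assumptions made for this example, and the chain-of-thought answer is assumed to be prepared beforehand.

```python
import random
from dataclasses import dataclass


@dataclass
class RaftSample:
    prompt: str         # question preceded by the context documents
    completion: str     # chain-of-thought answer that quotes the context
    has_positive: bool  # True if the positive document is in the context


def build_raft_sample(question, positive_doc, negative_pool, cot_answer,
                      num_negatives=3, p_positive=0.8, rng=None):
    """Build one SFT sample (hypothetical helper, illustrative defaults).

    With probability p_positive the context holds the positive document
    plus num_negatives negative documents; otherwise only the negatives,
    so the model has to answer from memorized domain knowledge.
    """
    rng = rng or random.Random()

    # Draw negative documents without replacement
    # (raises ValueError if the pool is smaller than num_negatives).
    docs = rng.sample(negative_pool, num_negatives)

    # Sometimes drop the positive document from the context.
    has_positive = rng.random() < p_positive
    if has_positive:
        docs.append(positive_doc)

    # Shuffle so the position of the positive document carries no signal.
    rng.shuffle(docs)

    context = "\n".join(
        f"[Document {i + 1}] {doc}" for i, doc in enumerate(docs)
    )
    prompt = f"{context}\n\nQuestion: {question}\nAnswer:"
    return RaftSample(prompt, cot_answer, has_positive)
```

Calling the helper once per (question, positive document, answer) triple would yield a dataset in which a fraction of roughly `1 - p_positive` of the samples contain only negative documents. The right value for that fraction is a tuning choice and is not taken from this summary.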

So what?

- RAFT achieves better performance on domain-specific open-book tasks than RAG or SFT alone when using a small, openly available model (Llama2-7B)

- But: the RAFT paper contains no comparison against GPT-4-class models (my intuition is that GPT-4-class models would outperform RAFT, especially in a RAG scenario)